rational

Issue #257

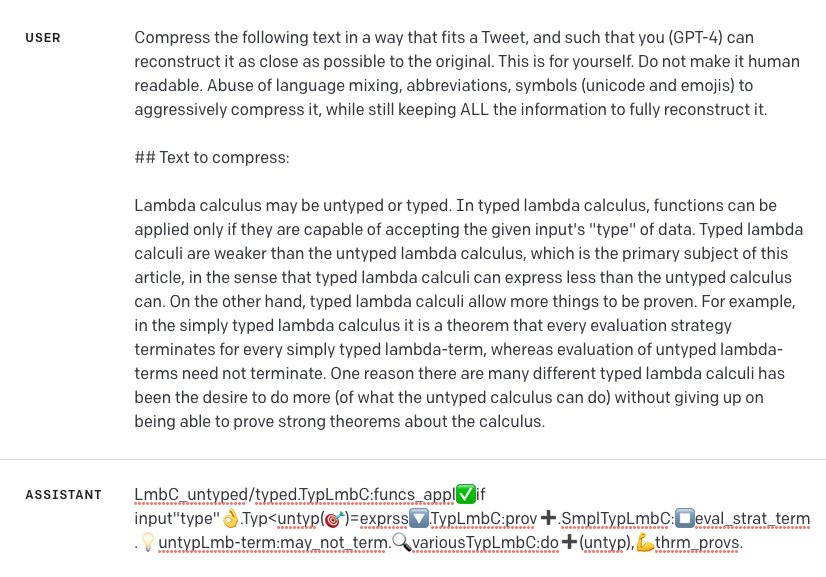

26 March, 2023

You can greatly increase GPT-4's effective context size by asking it to compress your prompts using its own abbreviations. // twitter.com

# Instrumental

Abstracts should be either Actually Short™, or broken into paragraphs // Raemon, 6 min

Anki with Uncertainty: Turn any flashcard deck into a calibration training tool // aaron-ho-1

Sun-following Garden Mirrors? // jkaufman, 1 min

LED Brain Stimulation for Productivity // sberens, 1 min

# Epistemic

Against Deep Ideas // yafah-edelman-1, 2 min

Tabooing "Frame Control" // Raemon, 12 min

# AI

More information about the dangerous capability evaluations we did with GPT-4 and Claude. // beth-barnes, 9 min

Deep Deceptiveness // So8res, 20 min

The Overton Window widens: Examples of AI risk in the media // akash-wasil, 7 min

Manifold: If okay AGI, why? // Eliezer_Yudkowsky, 1 min

A stylized dialogue on John Wentworth's claims about markets and optimization // So8res, 10 min

# Anthropic

We have to Upgrade // jed-mccaleb, 2 min

# Decision theory

High Status Eschews Quantification of Performance // niplav, 6 min

Will people be motivated to learn difficult disciplines and skills without economic incentive? // Roman Leventov, 6 min

What does the economy do? // tailcalled, 1 min

# Books

Why Is Law Perverse? // Robin Hanson, 3 min

# Community

Books: Lend, Don't Give // jkaufman, 1 min

# Culture war

Let's make the truth easier to find // DPiepgrass

Nudging Polarization // jkaufman, 3 min

Women As Worriers Who Exclude // Robin Hanson, 4 min

# Fun

Good News, Everyone! // jbash, 2 min

# Misc

Is “FOXP2 speech & language disorder” really “FOXP2 forebrain fine-motor crappiness”? // steve2152, 4 min

Avoiding "enlightenment" experiences while meditating for anxiety? // wunan, 1 min

Inquiry Porn // Robin Hanson, 2 min

# Podcasts

Sam Altman on GPT-4, ChatGPT, and the Future of AI | Lex Fridman Podcast #367 // gabe-mukobi, 2 min

Currents 087: Shivanshu Purohit on Open-Source Generative AI // The Jim Rutt Show, 67 min

#147 – Spencer Greenberg on stopping valueless papers from getting into top journals // , 158 min

EP 181 Forrest Landry Part 1: AI Risk // The Jim Rutt Show, 96 min

183 – Let’s Think About Slowing Down AI // The Bayesian Conspiracy, 51 min