rational

Issue #238: Full List

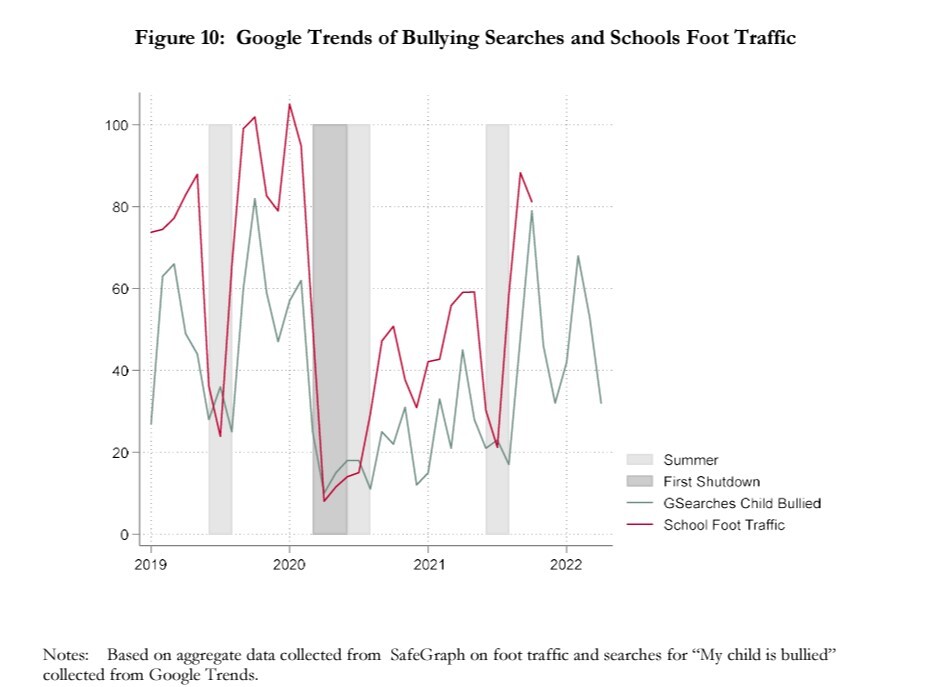

13 November, 2022

When you cancel school (snow days, COVID, summer, holidays), kids stop committing so much suicide // twitter.com

# Instrumental

How to Make Easy Decisions // lynettebye, 2 min

# Epistemic

Word-Distance vs Idea-Distance: The Case for Lanoitaring // Sable, 9 min

Distillation Experiment: Chunk-Knitting // AllAmericanBreakfast, 7 min

Can People Be Honestly Wrong About Their Own Experiences? // Scott Alexander, 6 min

# AI

Mysteries of mode collapse due to RLHF // janus, 14 min

How could we know that an AGI system will have good consequences? // So8res, 6 min

Applying superintelligence without collusion // Eric Drexler, 7 min

Trying to Make a Treacherous Mesa-Optimizer // MadHatter, 4 min

A philosopher's critique of RLHF // ThomasWoodside, 2 min

Instrumental convergence is what makes general intelligence possible // tailcalled, 4 min

Has anyone increased their AGI timelines? // Darren McKee, 1 min

Some advice on independent research // marius-hobbhahn, 12 min

A caveat to the Orthogonality Thesis // wuschel-schulz, 2 min

People care about each other even though they have imperfect motivational pointers? // TurnTrout, 8 min

Inverse scaling can become U-shaped // Edouard Harris, 1 min

Why I'm Working On Model Agnostic Interpretability // jessica-cooper, 2 min

A Walkthrough of Interpretability in the Wild (w/ authors Kevin Wang, Arthur Conmy & Alexandre Variengien) // neel-nanda-1, 3 min

fully aligned singleton as a solution to everything // carado-1, 1 min

4 Key Assumptions in AI Safety // Prometheus, 8 min

A first success story for Outer Alignment: InstructGPT // sharmake-farah, 1 min

Ways to buy time // akash-wasil, 14 min

Vanessa Kosoy's PreDCA, distilled // martinsq, 6 min

Hacker-AI – Does it already exist? // Erland, 12 min

The harnessing of complexity // geduardo, 3 min

LessWrong Poll on AGI // niclas-kupper, 1 min

Poster Session on AI Safety // neil-crawford

Is there a demo of "You can't fetch the coffee if you're dead"? // [email protected], 1 min

Mesatranslation and Metatranslation // jdp, 13 min

Are funding options for AI Safety threatened? W45 // Steinthal, 3 min

Loss of control of AI is not a likely source of AI x-risk // squek, 6 min

[ASoT] Thoughts on GPT-N // ulisse-mini, 1 min

Is AI Gain-of-Function research a thing? // MadHatter, 2 min

The Interpretability Playground // esben-kran, 1 min

[ASoT] Instrumental convergence is useful // ulisse-mini, 1 min

Is full self-driving an AGI-complete problem? // kraemahz, 1 min

How likely are malign priors over objectives? [aborted WIP] // david-johnston, 9 min

What are some low-cost outside-the-box ways to do/fund alignment research? // TrevorWiesinger, 1 min

A Case for Model Agnostic Interpretability // jessica-cooper, 2 min

Is there some kind of backlog or delay for data center AI? // TrevorWiesinger, 1 min

# Decision theory

Rudeness, a useful coordination mechanic // Raemon, 3 min

The biological function of love for non-kin is to gain the trust of people we cannot deceive // chaosmage, 9 min

Internalizing the damage of bad-acting partners creates incentives for due diligence // tailcalled, 1 min

Adversarial Priors: Not Paying People to Lie to You // eva_, 3 min

Is there any discussion on avoiding being Dutch-booked or otherwise taken advantage of one's bounded rationality by refusing to engage? // shminux, 1 min

Musings on the appropriate targets for standards // tailcalled, 1 min

Do Timeless Decision Theorists reject all blackmail from other Timeless Decision Theorists? // myren, 3 min

Desiderata for an Adversarial Prior // shminux, 1 min

Has Pascal's Mugging problem been completely solved yet? // EniScien, 1 min

Preferred Unfair Evaluations // Robin Hanson, 2 min

Who Watches The Watchers? You. // Robin Hanson, 1 min

Why Not For-Profit Government? // Robin Hanson, 4 min

# Books

[Book Review] "Station Eleven" by Emily St. John Mandel // lsusr, 1 min

Highlights From The Comments On Brain Waves // Scott Alexander, 14 min

# EA

We must be very clear: fraud in the service of effective altruism is unacceptable // evhub

Speculation on Current Opportunities for Unusually High Impact in Global Health // johnswentworth, 4 min

Opportunities that surprised us during our Clearer Thinking Regrants program // spencerg

# Community

I Converted Book I of The Sequences Into A Zoomer-Readable Format // dkirmani, 2 min

Women and Effective Altruism // p-g-keerthana-gopalakrishnan, 3 min

Thinking About Mastodon // jkaufman, 1 min

Charging for the Dharma // jchan, 5 min

EA (& AI Safety) has overestimated its projected funding — which decisions must be revised? // strawberry calm, 1 min

Value of Querying 100+ People About Humanity's Future // rodeo_flagellum, 1 min

Trying Mastodon // jkaufman, 1 min

Mastodon Linking Norms // jkaufman, 1 min

How do newcomers delve deeper into the community? // Lord Dreadwar, 1 min

Solstice 2022 Roundup // dspeyer, 1 min

# Culture war

What’s the Deal with Elon Musk and Twitter? // Zvi, 37 min

FTX will probably be sold at a steep discount. What we know and some forecasts on what will happen next // Nathan Young

Democracy Is in Danger, but Not for the Reasons You Think // ExCeph, 14 min

# Misc

What it's like to dissect a cadaver // OldManNick, 5 min Favorite

Exams-Only Universities // MathieuRoy, 2 min

What is epigenetics? // Metacelsus, 7 min

The optimal angle for a solar boiler is different than for a solar panel // yair-halberstadt, 2 min

Google Search as a Washed Up Service Dog: "I HALP!" // shminux, 1 min

divine carrot // OldManNick, 1 min

User-Controlled Algorithmic Feeds // jkaufman, 2 min

Intercept article about lab accidents // ChristianKl, 1 min

Why don't organizations have a CREAMO? // shminux, 1 min

Chord Notation // jkaufman, 1 min

What are examples of problems that were caused by intelligence, that couldn’t be solved with intelligence? // peter-o-malley, 1 min

Contra Resident Contrarian On Unfalsifiable Internal States // Scott Alexander, 22 min

# Podcasts

#140 – Bear Braumoeller on the case that war isn't in decline // , 167 min

# Rational fiction

[simulation] 4chan user claiming to be the attorney hired by Google's sentient chatbot LaMDA shares wild details of encounter // janus, 17 min