rational

Issue #234: Full List

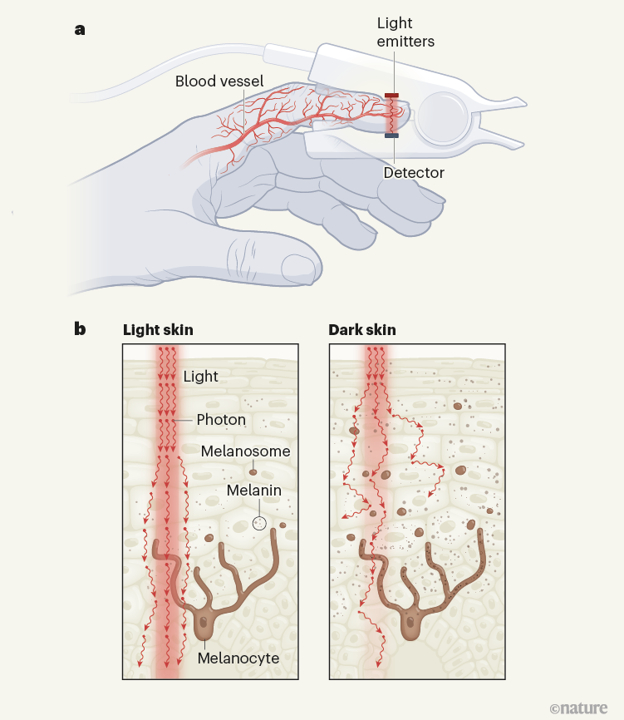

16 October, 2022

Skin colour affects the accuracy of medical oxygen sensors: They are often less accurate for people with dark skin. // www.nature.com

# Coronavirus

Covid 10/13/22: Just the Facts // Zvi, 11 min

How much of China's Zero COVID policy is actually about COVID? // jmh, 1 min

# Instrumental

I learn better when I frame learning as Vengeance for losses incurred through ignorance, and you might too // chaosmage, 4 min

A game of mattering // KatjaGrace, 5 min

Be more effective by learning important practical knowledge using flashcards // markus-stenemo

# Epistemic

A common failure for foxes // RobbBB, 2 min

Calibration of a thousand predictions // KatjaGrace, 5 min

What if human reasoning is anti-inductive? // Q Home, 16 min

When should you defer to expertise? A useful heuristic (Crosspost from EA forum) // sharmake-farah, 2 min

# AI

Counterarguments to the basic AI x-risk case // KatjaGrace, 41 min

Lessons learned from talking to >100 academics about AI safety // marius-hobbhahn, 14 min

Niceness is unnatural // So8res, 10 min

Contra shard theory, in the context of the diamond maximizer problem // So8res, 3 min

Possible miracles // akash-wasil, 9 min

Alignment 201 curriculum // ricraz, 1 min

Help out Redwood Research’s interpretability team by finding heuristics implemented by GPT-2 small // haoxing-du, 4 min

Another problem with AI confinement: ordinary CPUs can work as radio transmitters // RomanS, 1 min

Building a transformer from scratch - AI safety up-skilling challenge // marius-hobbhahn, 5 min

Anonymous advice: If you want to reduce AI risk, should you take roles that advance AI capabilities? // 80000hours

You are better at math (and alignment) than you think // TrevorWiesinger, 27 min

When reporting AI timelines, be clear who you're (not) deferring to // Sam Clarke

Instrumental convergence in single-agent systems // Edouard Harris, 11 min

Natural Categories Update // logan-zoellner, 1 min

A stubborn unbeliever finally gets the depth of the AI alignment problem // aelwood, 3 min

Uncontrollable AI as an Existential Risk // Karl von Wendt, 24 min

Results from the language model hackathon // esben-kran, 4 min

Let’s talk about uncontrollable AI // Karl von Wendt, 3 min

Instrumental convergence: single-agent experiments // Edouard Harris, 11 min

[Crosspost] AlphaTensor, Taste, and the Scalability of AI // jamierumbelow, 1 min

Misalignment-by-default in multi-agent systems // Edouard Harris, 29 min

Greed Is the Root of This Evil // Thane Ruthenis, 10 min

My argument against AGI // cveres

A strange twist on the road to AGI // cveres

Don't expect AGI anytime soon // cveres

Best resource to go from "typical smart tech-savvy person" to "person who gets AGI risk urgency"? // Liron, 1 min

Disentangling inner alignment failures // ejenner, 5 min

Everything is a Pattern, But Some Are More Complex Than Others... // damien-lasseur, 11 min

"AGI soon, but Narrow works Better" // AnthonyRepetto, 2 min

Objects in Mirror are Closer Than They Appear // damien-lasseur, 11 min

Misalignment Harms Can Be Caused by Low Intelligence Systems // DialecticEel, 1 min

Power-Seeking AI and Existential Risk // antonio-franca, 10 min

Instrumental convergence: scale and physical interactions // Edouard Harris, 21 min

Cataloguing Priors in Theory and Practice // paulbricman, 8 min

Article Review: Google's AlphaTensor // Robert_AIZI, 13 min

Previous Work on Recreating Neural Network Input from Intermediate Layer Activations // bglass, 1 min

# Meta-ethics

Against the normative realist's wager // joekc, 39 min

Utility In Faith? // Matt Goldwater, 6 min

# Longevity

Vegetarianism and depression // Maggy

# Anthropic

some simulation hypotheses // carado-1, 5 min

# Decision theory

Actually, All Nuclear Famine Papers are Bunk // derpherpize, 2 min

That one apocalyptic nuclear famine paper is bunk // derpherpize, 1 min

Why I think nuclear war triggered by Russian tactical nukes in Ukraine is unlikely // dave-orr, 3 min

Why So Many Cookie Banners? // jkaufman, 2 min

Vehicle Platooning - a real world examination of the difficulties in coordination // M. Y. Zuo, 2 min

Does biology reliably find the global maximum, or at least get close? // sharmake-farah, 1 min

[Sketch] Validity Criterion for Logical Counterfactuals // DragonGod, 6 min

Complex Impact Futures // Robin Hanson, 4 min

More Academic Prestige Futures // Robin Hanson, 3 min

# Math and CS

Six (and a half) intuitions for KL divergence // TheMcDouglas, 13 min

# Books

From technocracy to the counterculture // jasoncrawford, 31 min

# EA

We can do better than argmax // Jan_Kulveit

# Fun

AMC's animated series "Pantheon" is relevant to our interests // ben-smith

# Misc

Transformative VR Is Likely Coming Soon // jimrandomh, 2 min

The Balto/Togo theory of scientific development // pktechgirl, 2 min

Cleaning a Spoon is Complex // jkaufman, 1 min

Bounded distrust or Bounded trust? // M. Y. Zuo, 3 min

Metaculus Launches the 'Forecasting Our World In Data' Project to Probe the Long-Term Future // ChristianWilliams

Feelings // oren-montano, 11 min

Towards a comprehensive study of potential psychological causes of the ordinary range of variation of affective gender identity in males // tailcalled, 46 min

Replace G.P.A. With G.P.C.? // Robin Hanson, 4 min

# Podcasts

Did you enjoy Ramez Naam's "Nexus" trilogy? Check out this interview on neurotech and the law. // fowlertm, 1 min

Preventing an AI-related catastrophe (Article) // , 144 min

EP 167 Bruce Damer on the Origins of Life // The Jim Rutt Show, 116 min

Currents 071: Liam Madden on Rebirthing Democracy // The Jim Rutt Show, 71 min

# Rational fiction

The Epiphany of Mrs. Kugla // alexbeyman, 21 min

Perfect Enemy // alexbeyman, 56 min

The Space Between Moments // alexbeyman, 21 min

The Slow Reveal // alexbeyman, 30 min

# Videos of the week

Balaji Srinivasan: How to Fix Government, Twitter, Science, and the FDA | Lex Fridman Podcast #331 // Lex Fridman, 467 min