rational

Issue #227: Full List

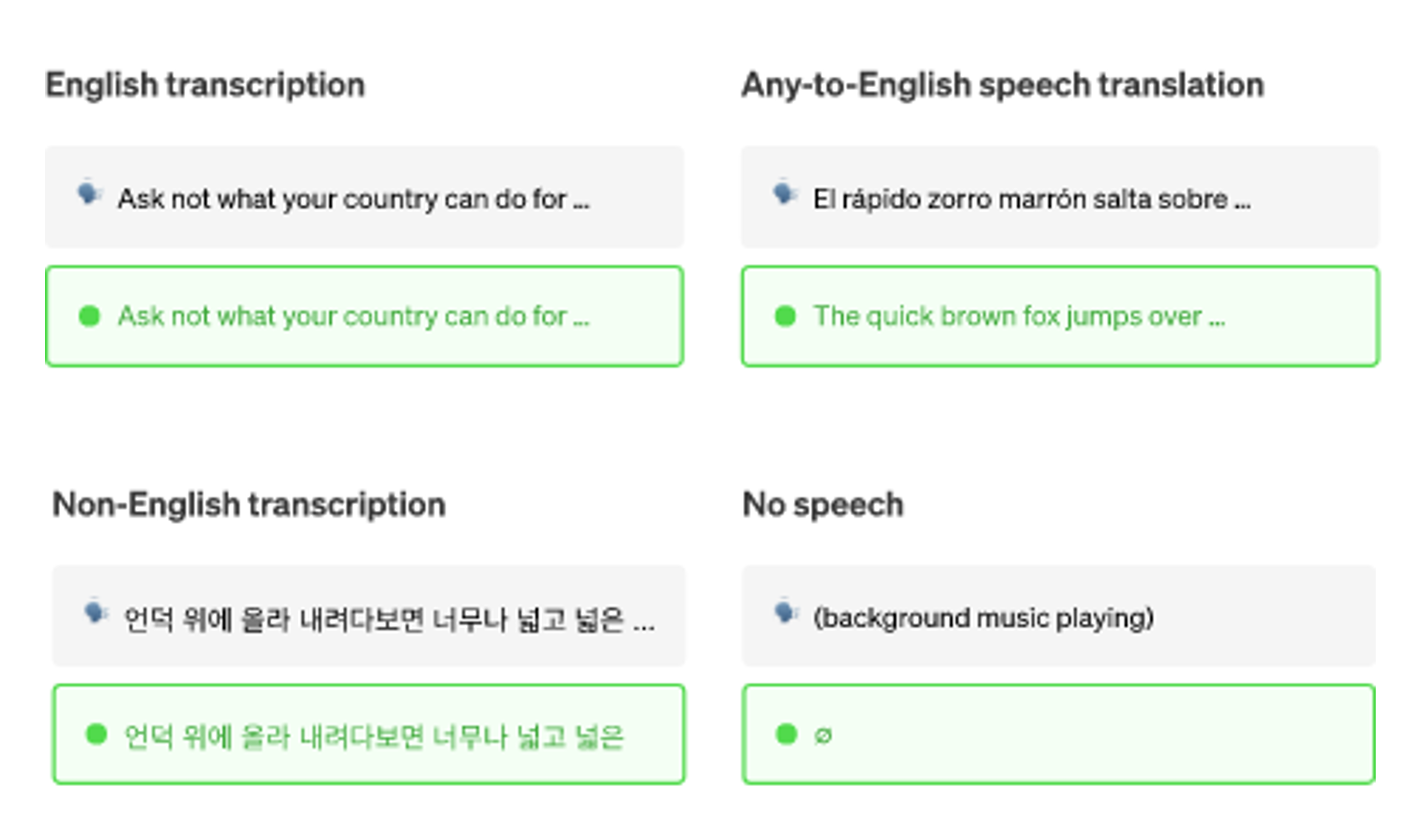

28 August, 2022

Whisper: Near human-level speech recognition, open-sourced. // openai.com

# Instrumental

Nate Soares' Life Advice // CatGoddess, 3 min

Ways to increase working memory, and/or cope with low working memory? // NicholasKross, 1 min

# Epistemic

Embracing the Opposition's Point // Yulia, 6 min

Please (re)explain your personal jargon // nathan-helm-burger, 4 min

We See The Sacred From Afar, To See It Together // Robin Hanson, 4 min

# AI

Some conceptual alignment research projects // ricraz, 3 min

AI art isn't "about to shake things up". It's already here. // Davis_Kingsley, 3 min

AGI Timelines Are Mostly Not Strategically Relevant To Alignment // johnswentworth, 1 min

The Shard Theory Alignment Scheme // David Udell, 2 min

Finding Goals in the World Model // jeremy-gillen, 14 min

Annual AGI Benchmarking Event // Lawrence Phillips, 2 min

Beyond Hyperanthropomorphism // PointlessOne, 1 min

AI Risk in Terms of Unstable Nuclear Software // Thane Ruthenis, 8 min

What's the Most Impressive Thing That GPT-4 Could Plausibly Do? // bayesed, 1 min

What if we solve AI Safety but no one cares // 142857, 1 min

Stable Diffusion has been released // P., 1 min

My Plan to Build Aligned Superintelligence // apollonianblues, 9 min

How evolution succeeds and fails at value alignment // Ocracoke, 5 min

Seeking Student Submissions: Edit Your Source Code Contest // Aris, 2 min

A Test for Language Model Consciousness // ethan-perez, 10 min

Solving Alignment by "solving" semantics // Q Home, 31 min

What would you expect a massive multimodal online federated learner to be capable of? // alenglander, 1 min

The Alignment Problem Needs More Positive Fiction // Netcentrica, 6 min

Why does AGI need a utility function? // randomstring, 1 min

AI Box Experiment: Are people still interested? // Double, 1 min

Help Understanding Preferences And Evil // Netcentrica, 2 min

Preparing for the apocalypse might help prevent it // Ocracoke, 1 min

AI alignment as “navigating the space of intelligent behaviour” // Nora_Ammann, 6 min

Evaluating OpenAI's alignment plans using training stories // ojorgensen, 5 min

Discussion on utilizing AI for alignment // elifland, 1 min

Timelines ARE relevant to alignment research (timelines 2 of ?) // nathan-helm-burger, 7 min

Is there a benefit in low capability AI Alignment research? // Adzusa, 2 min

# Anthropic

Is population collapse due to low birth rates a problem? // adrian-arellano-davin, 1 min

# Math and CS

Basin broadness depends on the size and number of orthogonal features // TheMcDouglas, 8 min

Variational Bayesian methods // ege-erdil, 9 min

# Books

[Review] The Problem of Political Authority by Michael Huemer // arjun-panickssery, 14 min

Your Book Review: Kora In Hell // Scott Alexander, 13 min

Book Review: What We Owe The Future // Scott Alexander, 28 min

# EA

Effective Altruism As A Tower Of Assumptions // Scott Alexander, 4 min

# Community

Your posts should be on arXiv // JanBrauner, 3 min

Double Crux In A Box // Screwtape, 4 min

# Misc

On Car Seats as Contraception // Zvi, 43 min

# Podcasts

AXRP Episode 17 - Training for Very High Reliability with Daniel Ziegler // DanielFilan, 43 min

EP 163 Benedict Beckeld on Western Self-Contempt // The Jim Rutt Show, 94 min

c42: Script Draft 1, Beats 18-21 // Constellation, 45 min

169 – S&E BS re AGI // The Bayesian Conspiracy, 132 min

# Rational fiction

everything is okay // carado-1, 8 min

The Bunny: An EA Short Story // JohnGreer, 7 min

# Videos of the week

OpenAI CEO Sam Altman | AI for the Next Era // Greylock, 35 min