rational

Issue #195: Full List

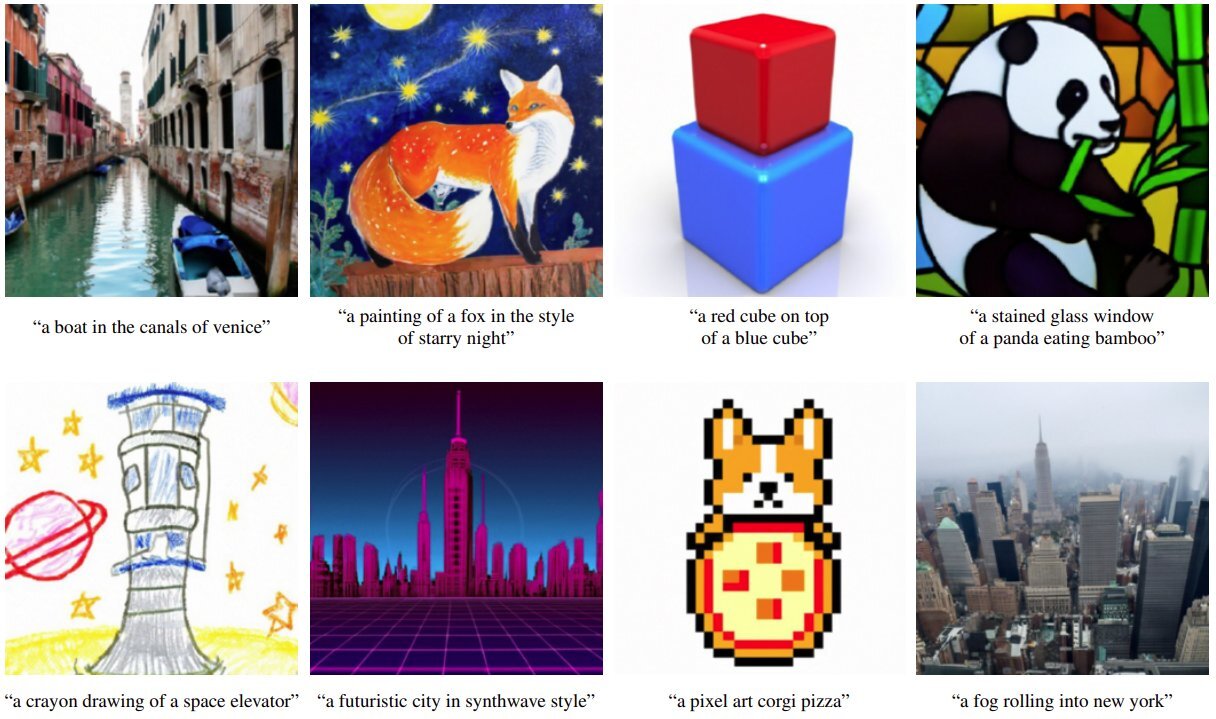

19 December, 2021 // Extraordinary rate of progress in image generation from text, able to do "client revisions". // twitter.com

# Coronavirus

Omicron Post #7 // Zvi, 15 min

Omicron Post #6 // Zvi, 9 min

Covid 12/16: On Your Marks // Zvi, 11 min

Understand the exponential function: R0 of the COVID // yandong-zhang, 1 min

Immune senescence, Christian theology, and the Spike protein // Josh Mitteldorf, 9 min

# Instrumental

How I became a person who wakes up early // Alex_Altair, 4 min

Blog Respectably // lsusr, 1 min

# Epistemic

Perishable Knowledge // lsusr, 3 min

Is "gears-level" just a synonym for "mechanistic"? // capybaralet, 1 min

The "Other" Option // jsteinhardt, 8 min

How the Equivalent Bet Test Actually Works // Erich_Grunewald, 5 min

The Phrase "No Evidence" Is A Red Flag For Bad Science Communication // Scott Alexander, 5 min

# AI

Teaser: Hard-coding Transformer Models // MadHatter, 1 min

Where can one learn deep intuitions about information theory? // Valentine, 2 min

My Overview of the AI Alignment Landscape: A Bird's Eye View // neel-nanda-1, 18 min

ARC's first technical report: Eliciting Latent Knowledge // paulfchristiano, 1 min

Some abstract, non-technical reasons to be non-maximally-pessimistic about AI alignment // RobbBB, 8 min

Consequentialism & corrigibility // steve2152, 8 min

Redwood's Technique-Focused Epistemic Strategy // adamShimi, 8 min

Introducing the Principles of Intelligent Behaviour in Biological and Social Systems (PIBBSS) Fellowship // adamShimi, 11 min

ARC is hiring! // paulfchristiano, 1 min

Reviews of “Is power-seeking AI an existential risk?” // joekc, 1 min

Hard-Coding Neural Computation // MadHatter, 24 min

The Natural Abstraction Hypothesis: Implications and Evidence // TheMcDouglas, 23 min

Understanding and controlling auto-induced distributional shift // LRudL, 20 min

Elicitation for Modeling Transformative AI Risks // Davidmanheim, 10 min

Should we rely on the speed prior for safety? // Marc-Everin Carauleanu, 6 min

Important ML systems from before 2012? // Jsevillamol, 1 min

Universality and the “Filter” // maggiehayes, 13 min

What’s the backward-forward FLOP ratio for Neural Networks? // marius-hobbhahn, 8 min

Disentangling Perspectives On Strategy-Stealing in AI Safety // shawnghu, 13 min

Motivations, Natural Selection, and Curriculum Engineering // Oliver Sourbut, 50 min

Interlude: Agents as Automobiles // daniel-kokotajlo, 5 min

Solving Interpretability Week // elriggs, 1 min

Summary of the Acausal Attack Issue for AIXI // Diffractor, 5 min

Evidence Sets: Towards Inductive-Biases based Analysis of Prosaic AGI // bayesian_kitten, 25 min

[Resolved] Who else prefers "AI alignment" to "AI safety?" // Evan_Gaensbauer, 1 min

DL towards the unaligned Recursive Self-Optimization attractor // jacob_cannell, 4 min

Framing approaches to alignment and the hard problem of AI cognition // ryan_greenblatt, 34 min

Some motivations to gradient hack // peterbarnett, 7 min

What’s the backward-forward FLOP ratio for NNs? // marius-hobbhahn, 9 min

Exploring Decision Theories With Counterfactuals and Dynamic Agent Self-Pointers // JoshuaOSHickman, 5 min

Ngo’s view on alignment difficulty // Rob Bensinger, 21 min

# Meta-ethics

Universal counterargument against “badness of death” is wrong // avturchin, 1 min

# Anthropic

Nuclear war anthropics // smountjoy, 1 min

Why do governments refer to existential risks primarily in terms of national security? // Evan_Gaensbauer, 1 min

We Don’t Have To Die // Robin Hanson, 5 min

# Decision theory

More power to you // jasoncrawford, 1 min

For and Against Lotteries in Elite University Admissions // sam-enright, 3 min

Housing Markets, Satisficers, and One-Track Goodhart // Jemist, 2 min

Decision Theory Breakdown—Personal Attempt at a Review // Jake Arft-Guatelli, 10 min

Venture Granters, The VCs of public goods, incentivizing good dreams // MakoYass, 13 min

# EA

Zvi’s Thoughts on the Survival and Flourishing Fund (SFF) // Zvi, 77 min

# Community

Occupational Infohazards // jessica.liu.taylor, 57 min

Enabling More Feedback for AI Safety Researchers // frances_lorenz, 3 min

Mystery Hunt 2022 // Scott Garrabrant, 1 min

In Defense of Attempting Hard Things, and my story of the Leverage ecosystem // Cathleen

# Culture war

We'll Always Have Crazy // Duncan_Sabien, 15 min

# Misc

Leverage // lsusr, 1 min

The end of Victorian culture, part II: people and history // david-hugh-jones, 8 min

What Caplan’s "Missing Mood" Heuristic Is Really For // AllAmericanBreakfast, 4 min

Virulence Management // harsimony, 4 min

A proposed system for ideas jumpstart // Just Learning, 3 min

How Group Minds Differ // Robin Hanson, 3 min

Ancient Plagues // Scott Alexander, 6 min

What Hypocrisy Feels Like // Robin Hanson, 3 min

# Podcasts

#118 - Jaime Yassif on safeguarding bioscience to prevent catastrophic lab accidents and bioweapons development // , 135 min

YANSS 221 – How a science educator in Oklahoma uses a portable planetarium to convert flat earthers //

EP 149 Joshua Vial on Enspiral // The Jim Rutt Show, 86 min

# Rational fiction

Analysis of Bird Box (2018) // TekhneMakre, 5 min

# Videos of the week

The Enduring Mystery of the Creator of Bitcoin // Jake Tran, 20 min