rational

Issue #100: Full List

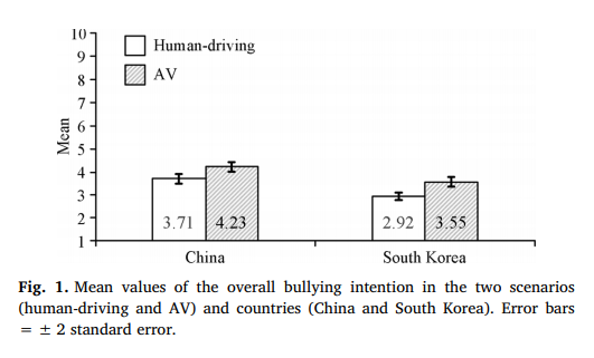

23 February, 2020

Self-driving cars will likely be bullied and taken advantage of, because of their gentle and overcautious disposition. // drive.google.com

# Instrumental

Training Regime Day 3: Tips and Tricks // Mark Xu, 13 min

Training Regime Day 2: Searching for bugs // Mark Xu, 3 min

Training Regime Day 8: Noticing // Mark Xu, 3 min

Training Regime Day 5: TAPs // Mark Xu, 8 min

Training Regime Day 4: Murphyjitsu // Mark Xu, 8 min

Training Regime Day 7: Goal Factoring // Mark Xu, 5 min

You are an optimizer. Act like it! // agentydragon, 2 min

Training Regime Day 6: Seeking Sense // Mark Xu, 8 min

Sleep Support: An Individual Randomized Controlled Trial // Scott Alexander, 4 min

# Epistemic

On characterizing heavy-tailedness // Jsevillamol, 5 min

# AI

Curiosity Killed the Cat and the Asymptotically Optimal Agent // michaelcohen, 1 min

On unfixably unsafe AGI architectures // steve2152, 6 min

Attainable Utility Preservation: Concepts // TurnTrout, 1 min

[Link and commentary] The Offense-Defense Balance of Scientific Knowledge: Does Publishing AI Research Reduce Misuse? // MichaelA, 3 min

Wireheading and discontinuity // Michele Campolo, 3 min

On the falsifiability of hypercomputation, part 2: finite input streams // jessicata, 4 min

Will AI undergo discontinuous progress? // SDM, 24 min

Goal-directed = Model-based RL? // adamShimi, 3 min

Stuck Exploration // Chris_Leong, 1 min

Counterfactuals versus the laws of physics // Stuart_Armstrong, 1 min

Gary Marcus: Four Steps Towards Robust Artificial Intelligence // Matthew Barnett, 5 min

Stepwise inaction and non-indexical impact measures // Stuart_Armstrong, 1 min

Subagents and impact measures: summary tables // Stuart_Armstrong, 1 min

What do you make of AGI:unaligned::spaceships:not enough food? // Brangus, 1 min

# Anthropic

Jan Bloch's Impossible War // Hivewired, 1 min (Favorite)

How to actually switch to an artificial body – Gradual remapping // George, 21 min

Deliberate Exposure Intuition // Robin Hanson, 5 min

# Math and CS

Tessellating Hills: a toy model for demons in imperfect search // DaemonicSigil, 2 min

Attainable Utility Preservation: Empirical Results // TurnTrout, nealeratzlaff, 11 min

UML XI: Nearest Neighbor Schemes // sil ver, 11 min

Indexical impact measures // Stuart_Armstrong, 7 min

(In)action rollouts // Stuart_Armstrong, 3 min

# Books

Set Ups and Summaries // Elizabeth, 5 min

# EA

Jaan Tallinn's Philanthropic Pledge // jaan, 1 min

Why SENS makes sense // emanuele ascani, 37 min

Mapping downside risks and information hazards // MichaelA, 10 min

EA Kansas City planning meetup, discussion & open questions // samstowers, 2 min

Does donating to EA make sense in light of the mere addition paradox? // George, 2 min

# Community

How to Lurk Less (and benefit others while benefiting yourself) // romeostevensit, 2 min

Blog Post Day (Unofficial) // Daniel Kokotajlo, 1 min

How do you survive in the humanities? // polymathwannabe, 2 min

Cambridge LW/SSC Meetup // AspiringRationalist, 1 min

# Culture war

Taking the Outgroup Seriously // Davis_Kingsley, 2 min

Plot Holes & Blame Holes // Robin Hanson, 5 min

# Misc

Making Sense of Coronavirus Stats // jmh, 1 min (Favorite)

What are information hazards? // MichaelA, 5 min

Is there an intuitive way to explain how much better superforecasters are than regular forecasters? // William_S, 1 min

How much delay do you generally have between having a good new idea and sharing that idea publicly online? // Mati_Roy, 1 min

Does anyone have a recommended resource about the research on behavioral conditioning, reinforcement, and shaping? // elityre, 1 min

Is there software for goal factoring? // crabman, 1 min

# Podcasts

We Want MoR (HPMOR Discussion Podcast) Completes Book One // moridinamael, 1 min

#187 — February 20, 2020 // Waking up with Sam Harris, 27 min

#186 — The Bomb // Waking up with Sam Harris, 79 min

# Videos of the week

How supply chain transparency can help the planet | Markus Mutz // TED, 13 min